Latest update: May 20, 2025

The “Submitted URL has Crawl Issues” error is one of the more confusing statuses in Google Search Console (GSC). It means that Googlebot tried to crawl a page you submitted and the request failed.

This error is a catch-all. The failure doesn’t fit any of the more specific error categories, so identifying what triggered it can be difficult.

Keep reading and learn how to resolve this problem!

Causes for the “Submitted URL has Crawl Issues” Error

The “Submitted URL has Crawl Issues” error appears if Googlebot can’t crawl a URL from the sitemap you submitted. As a result, Google can’t index the page, and it won’t show up in search results.

As we’ve said above, this error is tricky. It’s not always clear what causes it, but we gathered some possible reasons.

Server Errors (5xx Status Codes)

Server errors appear when the web server hosting your site cannot properly process Googlebot’s request. These errors fall under the 5xx HTTP status codes. They usually disrupt Google’s crawling process.

There are different types of 5xx errors.

For instance, a 500 (Internal Server Error) occurs when an unexpected condition prevents your server from fulfilling the request. The main causes include issues with your

- Code;

- Plugins;

- Server configurations.

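You’ll see a 502 (Bad Gateway) error when a server acting as a gateway or proxy receives an invalid response from the upstream server. A 503 (Service Unavailable) error means the server is temporarily unable to handle requests, usually because of overload or maintenance.

If these errors persist, Googlebot slows down its crawling of your site. Eventually, the affected pages can be dropped from the index.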
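To illustrate how these codes group together, here is a minimal Python sketch that labels a status code by its class. The function name and the labels are ours, chosen for illustration only.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Return a short label such as '503 Service Unavailable (server error)'."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:
        # Not a status code the standard library knows about.
        phrase = "Unknown"

    if 500 <= code <= 599:
        kind = "server error"
    elif 400 <= code <= 499:
        kind = "client error"
    elif 300 <= code <= 399:
        kind = "redirect"
    elif 200 <= code <= 299:
        kind = "success"
    else:
        kind = "other"
    return f"{code} {phrase} ({kind})"


for status in (500, 502, 503):
    print(describe_status(status))
```

Any code in the 5xx range points to a problem on the server side rather than with the URL itself.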

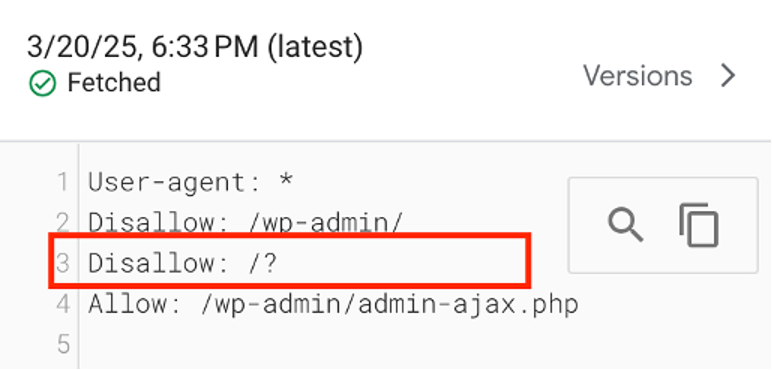

Blocked by Robots.txt or Noindex Tag

Restrictive settings in your robots.txt file or robots meta tags can keep Googlebot away from a page or keep the page out of the index. These limitations often lead to the “Submitted URL has Crawl Issues” error.

For example, a Disallow directive in your robots.txt file that matches the URL stops Googlebot from crawling it. Google won’t be able to process your content.

The <meta name="robots" content="noindex"> tag doesn’t block crawling, but it instructs Google to exclude your page from its index.
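You can get a rough idea of whether a Disallow rule affects a URL with Python’s built-in robots.txt parser. The sketch below is an approximation: the sample rules and URLs are made up, and the standard-library parser does not support every pattern Google does (wildcards, for instance), so treat the result as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Check whether the given robots.txt text lets Googlebot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


print(googlebot_allowed(EXAMPLE_ROBOTS, "https://example.com/private/page"))
print(googlebot_allowed(EXAMPLE_ROBOTS, "https://example.com/blog/post"))
```

The first URL falls under the Disallow rule and the second does not.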

Incorrect Redirects

Redirects help you direct users and search engines from one URL to another. However, without correct configuration, they can cause crawl issues.

Typical redirect problems that lead to crawling difficulties are

- Redirect loops;

- Long redirect chains;

- Broken redirects.
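To make these three problems concrete, here is a small Python sketch that walks a redirect map and reports what it finds. The map stands in for real HTTP responses, and the names, the `dead` set, and the five-hop limit are our assumptions for illustration, not values Google publishes.

```python
def trace_redirects(start, redirects, dead=frozenset(), max_hops=5):
    """Follow redirects from `start` and return (verdict, chain).

    redirects: dict mapping a URL to the URL it redirects to.
    dead:      URLs that would answer with an error such as 404.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return "loop", chain
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return "too long", chain
    if current in dead:
        return "broken", chain
    return "ok", chain


print(trace_redirects("/a", {"/a": "/b", "/b": "/a"}))
print(trace_redirects("/old", {"/old": "/new"}, dead={"/new"}))
```

A single hop to a working page is the healthy case. Anything else is worth fixing before you resubmit the URL.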

404 or Soft 404 Errors

If the URL doesn’t exist, the server will respond with a 404 status.

A soft 404 occurs if a page doesn’t have valuable content but returns a 200 (OK).

Both cases can lead to the “Submitted URL has Crawl Issues” error. It usually happens if you change a URL without the appropriate redirect or unintentionally submit an invalid page.
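Google doesn’t publish how it detects soft 404s, so the Python sketch below is only a rough heuristic of our own: it flags pages that return 200 but contain a typical “not found” phrase or very little text. The phrases and the 50-word threshold are arbitrary assumptions.

```python
import re

# Phrases that often appear on error pages (an illustrative, incomplete list).
NOT_FOUND_HINTS = ("page not found", "no longer available", "nothing was found")


def looks_like_soft_404(status: int, html: str, min_words: int = 50) -> bool:
    """Guess whether a 200 response is really an error page in disguise."""
    if status != 200:
        return False  # a real 404 or 410 is not a *soft* 404
    # Crude tag stripping; good enough for a quick check.
    text = re.sub(r"<[^>]+>", " ", html).lower()
    if any(hint in text for hint in NOT_FOUND_HINTS):
        return True
    return len(text.split()) < min_words


print(looks_like_soft_404(200, "<h1>Page not found</h1>"))
```

Pages flagged this way should either get real content or return a proper 404 or 410 status.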

DNS Resolution Issues

DNS problems arise when Googlebot can’t resolve your domain name. As a result, it can’t reach your site.

Here are the main causes for this issue:

- Incorrect DNS settings or misconfigurations;

- Temporary outages of your provider;

- Restrictive firewall or security settings.
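A quick way to rule out the first two causes is to check whether your hostname resolves at all. Here is a minimal Python sketch. The `resolver` parameter is our addition so the function can be tried without network access, and the check runs from your machine, so it can’t prove what Googlebot sees.

```python
import socket


def resolves(hostname: str, resolver=socket.getaddrinfo) -> bool:
    """Return True if the hostname resolves to at least one address."""
    try:
        return len(resolver(hostname, 443)) > 0
    except socket.gaierror:
        # Raised when the name cannot be resolved.
        return False


# Usage (needs network access):
# print(resolves("example.com"))
```

If the name fails to resolve from several networks, start with your DNS records and your provider’s status page.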

Insufficient Crawl Budget

Google assigns a crawl budget to every website. This budget determines how many pages it will crawl in a specific timeframe. If Googlebot reaches the limit, some pages may not get crawled.

This type of crawling issue usually happens to

- Large websites with thousands of pages;

- Sites with many duplicate or low-value pages;

- Sites with frequent server timeouts.

Blocked by Firewall or Security Plugins

Some security settings might block Googlebot by mistake. These restrictions can also lead to the “Submitted URL has Crawl Issues” error.

For example, certain firewall rules may treat Googlebot as a threat. DDoS protection services and security plugins sometimes block legitimate crawlers as well.
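Before you allowlist a crawler, it helps to confirm that the request really came from Google. Google documents a reverse DNS check followed by a forward DNS check for this. The Python sketch below follows that idea under simplifying assumptions: the function names are ours, only the googlebot.com and google.com domains are checked, and the forward lookup covers IPv4 only.

```python
import socket

# Domains Google documents for Googlebot reverse DNS names.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_google_hostname(hostname: str) -> bool:
    """True if the reverse DNS name belongs to a Google crawler domain."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_CRAWLER_DOMAINS)


def is_verified_googlebot(ip: str) -> bool:
    """Reverse lookup, domain check, then forward lookup (needs network)."""
    try:
        hostname = socket.gethostbyaddr(ip)[0]
        if not is_google_hostname(hostname):
            return False
        forward_ips = socket.gethostbyname_ex(hostname)[2]
    except OSError:
        # Covers failed reverse or forward lookups.
        return False
    return ip in forward_ips


print(is_google_hostname("crawl-66-249-66-1.googlebot.com"))
```

A request that claims to be Googlebot in its user agent but fails this check is safe to keep blocking.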

Fixing the “Submitted URL has Crawl Issues” Error

You may experience the “Submitted URL has Crawl Issues” error due to many different factors. So, you need to evaluate all possible causes to find the right solution.

We gathered all the steps you need to complete to resolve this problem.

Step 1: Locate Affected URLs

First, you need to find all pages experiencing crawling issues.

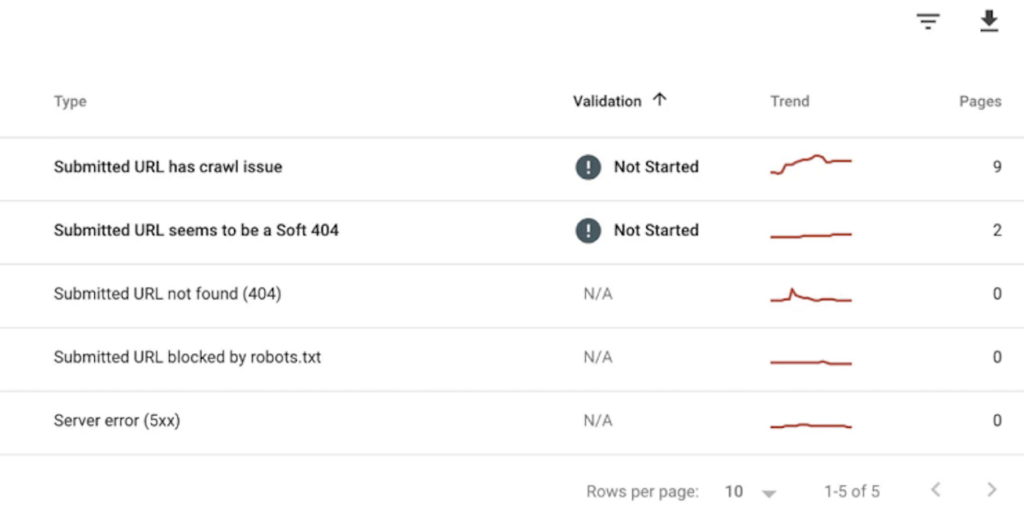

Log in to your GSC account and pick the property you want to analyze. Then, open the “Pages” report in the “Indexing” section.

This report displays all the URLs and their statuses. Plus, it has a list of links to all the affected pages. Keep scrolling until you find the message about the “Submitted URL has Crawl Issues” error.

Click on this alert to view all the pages involved. Export the list to use it for further diagnostics.
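Once you have the export, a few lines of Python can turn it into a clean list of URLs for the next steps. This sketch assumes a CSV file with a column named “URL”. Check the header of your own export and adjust the `column` argument if it differs. The sample data is made up.

```python
import csv
import io


def read_affected_urls(csv_text: str, column: str = "URL") -> list:
    """Return the unique URLs from the export, in their original order."""
    reader = csv.DictReader(io.StringIO(csv_text))
    urls = []
    for row in reader:
        url = (row.get(column) or "").strip()
        if url and url not in urls:
            urls.append(url)
    return urls


# Made-up sample that mimics an exported table.
sample = (
    "URL,Last crawled\n"
    "https://example.com/a,2025-05-01\n"
    "https://example.com/b,2025-05-02\n"
    "https://example.com/a,2025-05-03\n"
)
print(read_affected_urls(sample))
```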

Step 2: Inspect the URL

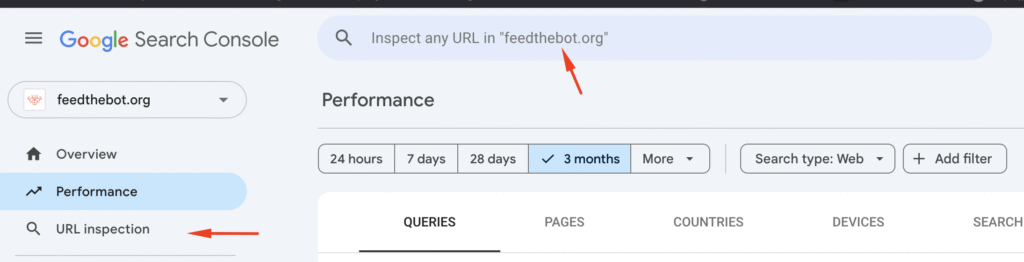

Before you make any changes, you need to diagnose the URL to find out exactly what’s wrong.

Use the URL Inspection Tool in GSC to analyze each problematic page individually. This tool will show whether the page is

- Indexed;

- Blocked;

- Facing accessibility issues.

Also, click on Test Live URL to check if the issue continues in real time. If the live test succeeds, the problem could have been temporary.

Step 3: Check Server Response

As we’ve noted above, problems with your server might lead to this error. So, you need to handle server-side issues as fast as possible.

Check your server logs for errors indicating

- Downtime;

- Overload;

- Firewall restrictions.

If you notice a high server load, consider optimizing your hosting plan or using a CDN to reduce strain. Also, make sure that your hosting provider is not blocking Googlebot requests.

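Plus, you can run the SEO audits in Lighthouse. They flag problems such as an unsuccessful HTTP status code, an invalid robots.txt file, or a page blocked from indexing. Keep in mind that Lighthouse loads the page as a browser, so rely on the live test in the URL Inspection Tool to see what Googlebot itself receives.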
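If you have access to raw access logs, a short script can surface the server errors that were served to Googlebot. The Python sketch below assumes the “combined” log format that Apache and Nginx commonly use. The function name and the sample line are ours, and a user agent string alone does not prove the request came from Google.

```python
import re
from collections import Counter

# Matches the request, status, and trailing user agent of a combined log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_server_errors(lines):
    """Count 5xx responses per (path, status) for requests claiming to be Googlebot."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match:
            continue
        if "Googlebot" in match["agent"] and match["status"].startswith("5"):
            errors[(match["path"], int(match["status"]))] += 1
    return errors


sample_line = (
    '66.249.66.1 - - [20/May/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 503 0 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
print(googlebot_server_errors([sample_line]))
```

Paths that show up repeatedly here are good candidates for the server-side fixes described above.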

Step 4: Adjust Robots.txt and Meta Tags

Sometimes issues arise because Googlebot is blocked from crawling the page.

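You need to open your robots.txt file and check for any Disallow rules that match the affected URL. Also, use GSC’s robots.txt report, which replaced the older robots.txt tester, together with the URL Inspection Tool to verify if Googlebot can access the page.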

Next, evaluate the HTML source of the page for a noindex tag. If the page is mistakenly blocked, remove this tag or update the robots.txt file accordingly.
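Checking the source by hand gets tedious for a long list of pages. Here is a minimal Python sketch that looks for a noindex directive in the robots meta tags and, optionally, in an X-Robots-Tag response header. The class and function names are ours, and the sketch only inspects the HTML you pass in. It doesn’t see tags injected later by JavaScript.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if the page or the header value asks to stay out of the index."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = [d.strip().lower() for d in x_robots_tag.split(",")]
    blocking = {"noindex", "none"}  # "none" means noindex plus nofollow
    return bool(blocking & set(parser.directives + header))


print(has_noindex('<meta name="robots" content="noindex, follow">'))
```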

Step 5: Fix Broken Links or Redirect Issues

Your next step is to fix the broken pages. If you deleted a page by mistake, restore the missing content. If a resource is outdated, set up a 301 redirect to the most relevant working page.

Next, test your redirects with checker tools to confirm they are functioning correctly.

If the page should stay removed, return a 410 (Gone) status instead of a 404 to signal that the removal is intentional and permanent.
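How you configure these responses depends on your server or CMS. As a language-neutral illustration, here is a tiny Python WSGI application that answers with a 301 for moved pages and a 410 for removed ones. The paths in `MOVED` and `GONE` are hypothetical, and a real site would route every other path to its actual content.

```python
# Hypothetical paths for illustration only.
MOVED = {"/old-pricing": "/pricing"}  # old path -> new path
GONE = {"/summer-sale-2019"}          # permanently removed paths


def app(environ, start_response):
    """Minimal WSGI app: 301 for moved pages, 410 for removed ones."""
    path = environ.get("PATH_INFO", "/")
    if path in MOVED:
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    if path in GONE:
        start_response("410 Gone", [("Content-Type", "text/plain")])
        return [b"This page has been removed."]
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Regular page content."]


# To try it locally:
# from wsgiref.simple_server import make_server
# make_server("", 8000, app).serve_forever()
```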

Step 6: Review Page Load Speed

Slow-loading pages or mobile accessibility problems might also lead to the “Submitted URL has Crawl Issues” error.

You need to determine and fix any slow-loading elements. Here are some measures you can take to improve page speed:

- Compress images;

- Optimize the scripts;

- Remove unnecessary redirects.

Also, try to avoid elements like pop-ups or interstitials that block access to content. They can cause crawling difficulties.

Ensure proper lazy loading settings so Googlebot can fully render the content.

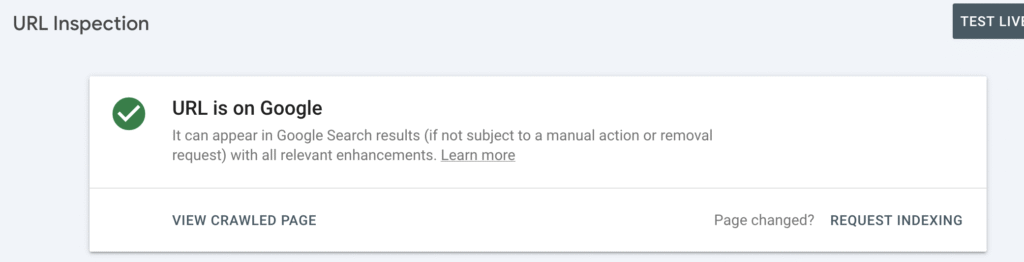

Step 7: Resubmit the URL for Indexing

After you apply all the fixes, open the URL Inspection tool again and run a live test to check if your modifications were successful. Then, press the “Request Indexing” button to make the processing faster. You can also click “Validate Fix” in the Pages report to ask Google to recheck all the affected URLs.

Wait a few days and monitor the Pages report to confirm the error disappears. If the issue persists, double-check for any problems and repeat the process.

Step 8: Update the Sitemap

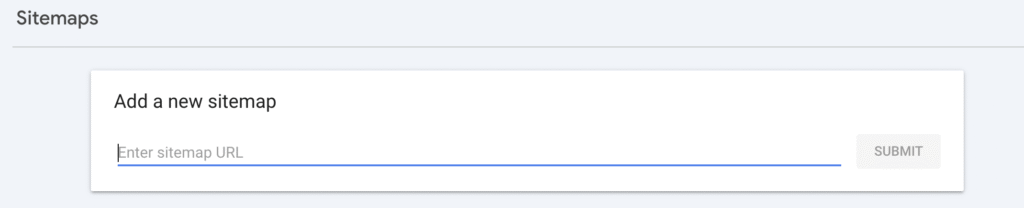

The last thing to do is update your sitemap to prevent indexing problems. You can use GSC’s Sitemaps tool to resubmit the updated version.

Regularly audit your sitemap and remove any URLs that are no longer valid. Also, we recommend using automatic updates if your site frequently changes.
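If you maintain the sitemap yourself, you can regenerate it from a list of URLs you have already checked. The Python sketch below keeps only the URLs that returned 200 and writes them in the standard sitemap format. The function name and the input dictionary of URL to status code are our assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(url_statuses: dict) -> bytes:
    """Build sitemap XML from {url: status}, keeping only URLs that returned 200."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status in url_statuses.items():
        if status != 200:
            continue  # drop redirects, 404s, and server errors
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


checked = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
}
print(build_sitemap(checked).decode("utf-8"))
```

Save the output as your sitemap file, then resubmit it in GSC.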

Key Takeaways

- The “Submitted URL has Crawl Issues” error can be really tricky and confusing. It appears if Googlebot has problems crawling your site;

- Different factors might lead to this issue, including DNS problems, security limitations, or server errors. So, it’s hard to diagnose and analyze.

- To fix this problem:

  - Locate affected URLs;

  - Check your server response;

  - Modify the robots.txt file;

  - Settle any broken links or redirect issues;

  - Fix slow-loading elements;

  - Request indexing and update the sitemap.

If you encounter other errors, refer to our Google Search Console guidelines to resolve them.

FAQ

- What is a crawl issue?

A crawl issue appears when Googlebot can’t properly access or index your pages. It usually happens because of server problems, broken links, or blocked resources.

- What should I do if the URL returns a server error (5xx)?

You should fix the server overload or misconfiguration and ensure the page is accessible. Then, request a re-crawl in GSC.

- Can I fix a “Submitted URL has Crawl Issues” error by resubmitting the sitemap?

No. You need to identify and fix the root cause first. Then you can resubmit.